Motivation

Its the same. It hasn’t changed: curses of dimensionality and history.

Goal: to solve decentralized multi-agent MDPs.

Key Insights

- macro-actions (MAs) to reduce computational complexity (like hierarchical planning)

- uses cross entropy to make infinite horizon problem tractable

Prior Approaches

- masked Monte Carlo search: heuristic based, no optimality garantees

- MCTS: poor performance

Direct Cross Entropy

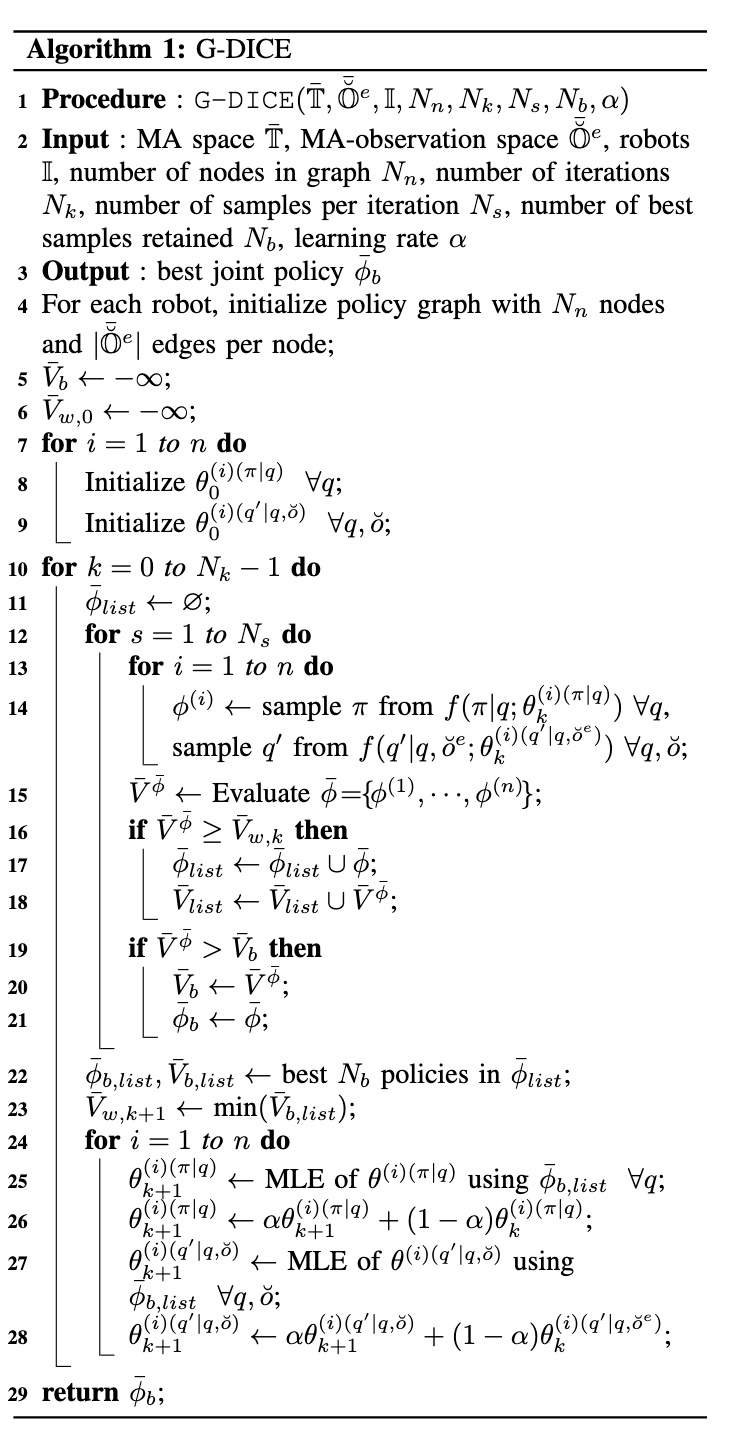

see also Cross Entropy Method

- sample a value function \(k\)

- takes \(n\) highest sampled values

- update parameter \(\theta\)

- resample until distribution convergence

- take the best sample \(x\)

G-DICE

- create a graph with exogenous \(N\) nodes, and \(O\) outgoing edges (designed before)

- use Direct Cross Entropy to solve for the best policy

Results

- demonstrates improved performance over MMCS and MCTS

- does not need robot communication

- garantees convergence for both finite and infiinte horizon

- can choose exogenous number of nodes in order to gain computational savings