What if our initial state never change or is deterministically changing? For instance, say, for localization. This should make solving a POMDP easier.

POMDP-lite

- \(X\) fully observable states

- \(\theta\) hidden parameter: finite amount of values \(\theta_{1 \dots N}\)

- where \(S = X \times \theta\)

we then assume conditional independence between \(x\) and \(\theta\). So: \(T = P(x’|\theta, x, a)\), where \(P(\theta’|\theta,x,a) = 1\) (“our hidden parameter is known or deterministically changing”)

Solving

Main Idea: if that’s the case, then we can split our models into a set of MDPs. Because \(\theta_{j}\) change deterministically, we can have a MDP solved ONLINE over \(X\) and \(T\) for each possible initial \(\theta\). Then, you just take the believe over \(\theta\) and sample over the MDPs based on that belief.

Reward bonus

To help coordination, we introduce a reward bonus

- exploration reward bonus, which encourages exploration (this helps coordinate)

- maintain a value \(\xi(b,x,a)\) which is the number of times b,x,a is visited—if it exceeds a number of times, clip reward bonus

Whereby:

\begin{equation} RB(b,s,a) = \beta \sum_{s’}^{} P(s’|b,s,a) || b_{s} - b ||_{1} \end{equation}

which encourages information gain by encouraging exploring states with more \(L_{1}\) divergence in belief compared to our current belief.

Then, we can formulate an augmented reward function \(\tilde{R}(b,s,a) = R(s,a) + RB(b,s,a)\).

Solution

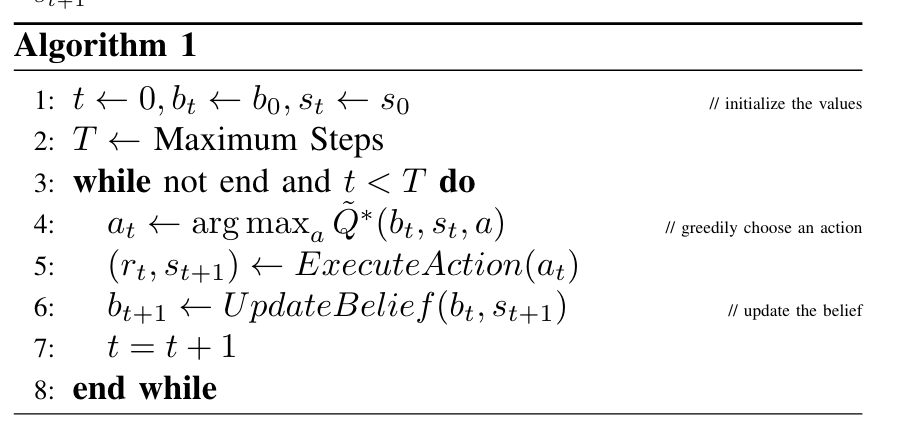

Finally, at each timestamp, we look at our observation and assume it does not change. This gives an MDP:

\begin{equation} \tilde{V}^{*} (b,s) = \max_{a} \left\{ \tilde{R}(b,s,a) + \gamma \sum_{s’}^{} P(s’|b,s,a) \tilde{V}^{*} (b,s’)\right\} \end{equation}

which we solve however we’d like. Authors used UCT.

UCT