Let’s say we want to know the chance of an event occurring \(k\) times in a unit of time, given that, on average, the event happens at a rate of \(\lambda\) per unit time.

“What’s the probability that there are \(k\) earthquakes in 1 year if there are on average \(2\) earthquakes per year?”

where:

- events have to be independent

- probability of success in each trial doesn’t vary

constituents

- \(\lambda\): average count of events per unit time

- \(X \sim Poi(\lambda)\)

requirements

the probability mass function:

\begin{equation} P(X=k) = e^{-\lambda} \frac{\lambda^{k}}{k!} \end{equation}
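As a quick sanity check, the formula above can be evaluated directly. This is a minimal sketch using only the standard library; the helper name `poisson_pmf` is made up for illustration, and the rate of 2 comes from the earthquake example.

```python
import math


def poisson_pmf(k: int, lam: float) -> float:
    """P(X = k) for X ~ Poi(lam), straight from the probability mass function."""
    return math.exp(-lam) * lam**k / math.factorial(k)


# Earthquake example: on average 2 earthquakes per year.
for k in range(5):
    print(k, poisson_pmf(k, 2.0))
```

Summing `poisson_pmf(k, 2.0)` over all \(k\) should give 1, up to floating-point error.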

additional information

properties of the Poisson distribution

- expected value: \(\lambda\)

- variance: \(\lambda\)

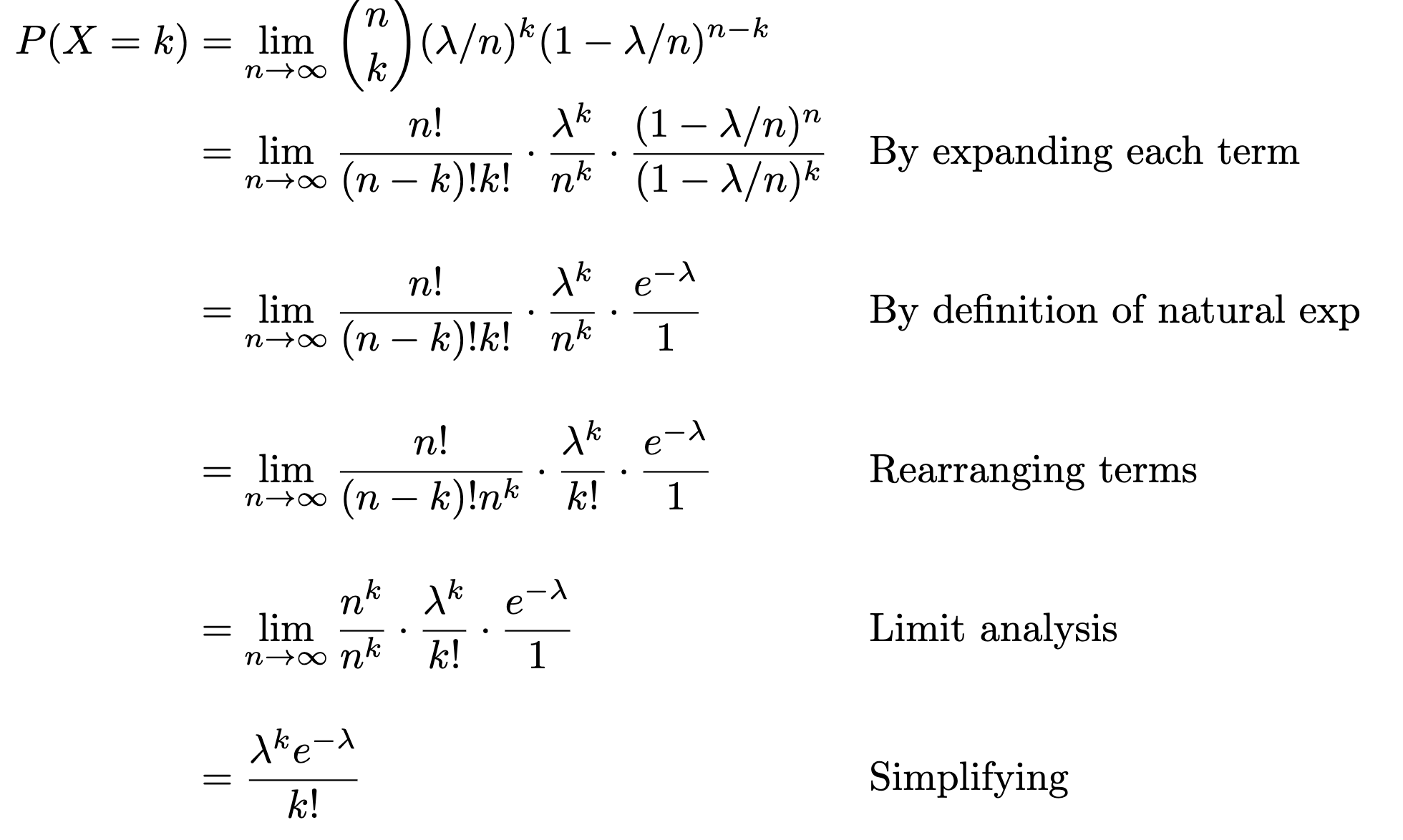

derivation

We divide the unit time into \(n\) infinitely small buckets, each of which holds at most one event, and plug into a binomial distribution, to formulate the question:

“what’s the probability of getting \(k\) events out of a large number \(n\) of samples, where each sample has probability \(\frac{\lambda}{n}\) of containing an event?”

\begin{equation} P(X=k) = \lim_{n \to \infty} {n \choose k} \qty(\frac{\lambda}{n})^{k}\qty(1- \frac{\lambda}{n})^{n-k} \end{equation}

and then do algebra.
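One way to sketch that algebra is to expand the binomial coefficient and group the factors by how they behave as \(n\) grows:

\begin{equation} {n \choose k} \left(\frac{\lambda}{n}\right)^{k} \left(1-\frac{\lambda}{n}\right)^{n-k} = \frac{\lambda^{k}}{k!} \cdot \frac{n(n-1)\cdots(n-k+1)}{n^{k}} \cdot \left(1-\frac{\lambda}{n}\right)^{n} \cdot \left(1-\frac{\lambda}{n}\right)^{-k} \end{equation}

For fixed \(k\), as \(n \to \infty\) the second factor goes to \(1\), the third goes to \(e^{-\lambda}\), and the fourth goes to \(1\), which leaves:

\begin{equation} P(X=k) = e^{-\lambda} \frac{\lambda^{k}}{k!} \end{equation}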

And because of this, when you have a large \(n\) and a small \(p\) for your binomial distribution, you can approximate it with a Poisson distribution, where \(\lambda = np\).
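A small numerical sketch of this approximation, assuming Python 3.8+ for `math.comb`; the helper names and the choice of \(\lambda = 2\), \(k = 3\) are illustrative only. The gap between the two probabilities should shrink as \(n\) grows with \(np\) held fixed.

```python
import math


def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ Bin(n, p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k: int, lam: float) -> float:
    """P(X = k) for X ~ Poi(lam)."""
    return math.exp(-lam) * lam**k / math.factorial(k)


lam, k = 2.0, 3  # illustrative values
for n in (10, 100, 10_000):
    p = lam / n  # keep np = lam fixed while n grows
    gap = abs(binomial_pmf(k, n, p) - poisson_pmf(k, lam))
    print(n, binomial_pmf(k, n, p), poisson_pmf(k, lam), gap)
```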

adding Poisson distributions

For independent \(A \sim Poi(\lambda_{A})\) and \(B \sim Poi(\lambda_{B})\):

\begin{equation} A+B \sim Poi(\lambda_{A}+ \lambda_{B}) \end{equation}
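This can be checked numerically by convolving the two mass functions, since for independent variables \(P(A+B=k) = \sum_{j=0}^{k} P(A=j)\,P(B=k-j)\). The sketch below uses made-up rates of 1.5 and 2.5 and hypothetical helper names.

```python
import math


def poisson_pmf(k: int, lam: float) -> float:
    """P(X = k) for X ~ Poi(lam)."""
    return math.exp(-lam) * lam**k / math.factorial(k)


def sum_pmf(k: int, lam_a: float, lam_b: float) -> float:
    """P(A + B = k) for independent A ~ Poi(lam_a), B ~ Poi(lam_b), by convolution."""
    return sum(poisson_pmf(j, lam_a) * poisson_pmf(k - j, lam_b) for j in range(k + 1))


lam_a, lam_b = 1.5, 2.5  # illustrative rates
for k in range(6):
    # The two columns should agree up to floating-point error.
    print(k, sum_pmf(k, lam_a, lam_b), poisson_pmf(k, lam_a + lam_b))
```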

MLE for the Poisson distribution

Given \(n\) i.i.d. samples \(x_{1}, \dots, x_{n}\):

\begin{equation} \hat{\lambda} = \frac{1}{n} \sum_{i=1}^{n} x_{i} \end{equation}

yes, that’s just the sample mean
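A rough way to convince yourself of this is to compare the sample mean against a brute-force search over the log-likelihood. The counts below are made up, and the grid search is only there for illustration; it is not how you would fit the rate in practice.

```python
import math


def log_likelihood(lam: float, xs: list) -> float:
    """Log-likelihood of i.i.d. Poisson samples xs under rate lam."""
    # log P(X = x) = -lam + x * log(lam) - log(x!), with log(x!) = lgamma(x + 1)
    return sum(-lam + x * math.log(lam) - math.lgamma(x + 1) for x in xs)


xs = [2, 0, 3, 1, 4, 2]  # made-up event counts
lam_hat = sum(xs) / len(xs)  # the MLE: just the sample mean

# Brute-force check: scan candidate rates from 0.5 to 4.49 in steps of 0.01.
grid = [0.5 + 0.01 * i for i in range(400)]
best = max(grid, key=lambda lam: log_likelihood(lam, xs))
print(lam_hat, best)
```

The grid maximizer should land within one grid step of the sample mean.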